The goal of the project is to create a robot that can interact with the world in a semi-natural way.

I have been reading a lot about OpenCV facial recognition, and also about Amazon Alexa or Google Home style speech interactions, and realized that it would be awesome to have a robot that could recognize people (distinguish different people, and identify a person that it did not know) and then interact with the person using STT (speech-to-text) and TTS (text-to-speech) algorithms. A simple example would be to ask “what is your name?” if it did not recognize you, and then possibly store a number of facial images of you that would allow it to later say “hello, Roland”, for example.
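As a rough sketch of that greet-or-ask behaviour, the snippet below assumes each detected face has already been reduced to a short list of numbers (an "embedding") by whatever recognizer ends up being used. The function names, the `known_faces` dictionary, and the 0.6 distance threshold are all hypothetical placeholders for illustration, not part of OpenCV or of the actual robot code.

```python
import math

def closest_match(embedding, known_faces, max_distance=0.6):
    """Return the name whose stored sample is nearest, or None if nothing is close enough.

    known_faces maps a name to a list of stored embeddings (hypothetical format).
    max_distance is an arbitrary illustrative threshold.
    """
    best_name, best_dist = None, float("inf")
    for name, samples in known_faces.items():
        for sample in samples:
            dist = math.dist(embedding, sample)  # Euclidean distance
            if dist < best_dist:
                best_name, best_dist = name, dist
    return best_name if best_dist <= max_distance else None

def greet(embedding, known_faces):
    """Pick the sentence the TTS side would speak for this face."""
    name = closest_match(embedding, known_faces)
    if name is None:
        return "What is your name?"
    return f"Hello, {name}"

def remember(name, embedding, known_faces):
    """Store one more sample for a person so they can be recognized later."""
    known_faces.setdefault(name, []).append(embedding)
```

A stranger gets the question, and once their answer (from the STT side) is stored with `remember`, a similar embedding later produces the greeting. Storing several samples per person, as suggested above, simply gives `closest_match` more chances to find a near neighbour.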

How could this be done?

Well, here are my first thoughts on the subject. Later posts will describe the progress that I have made so far.

- TTS and STT: there are several dedicated *nix SBCs (single-board computers) built for hobbyists that make this goal fairly easy to accomplish. I have narrowed it down to a really good candidate: the ReSpeaker Core v2.0 board from Seeed Studio. (ReSpeaker Core v2.0 | Seeed Studio Wiki)

They have lots of tutorials and examples to play with, which let you run a version of Amazon Alexa out of the box. It has plenty of processing power and also an on-board DSP chip with 8 microphones that can do beamforming and other cool stuff to allow the little guy to hear you properly.

- OpenCV visual object recognition: I decided that a dedicated SBC was also the way to go here, and narrowed it down to the NanoPC-T4: http://wiki.friendlyarm.com/wiki/index.php/NanoPC-T4

It has tons of processing power for an SBC and also 2 CSI camera ports to allow stereo vision capabilities (distance detection), and could easily run openCV and be able to recognize faces or other complex objects in real time. Have not have too much chance to play with this guy, but I bought it have started building it into the robot head design. -

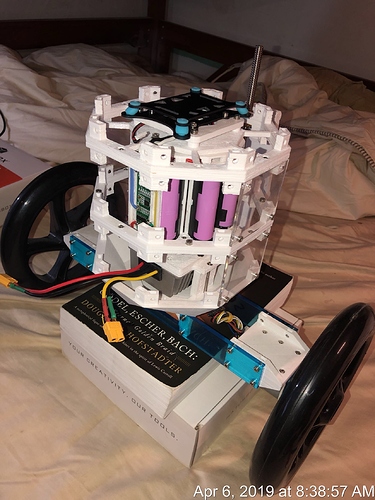

Balance Bot: because why not. A robot that can not demonstrate dynamic balancing will definitely not appear intelligent enough for the project. What way to scream “I understand my environment” like a balance bot. I was planning on building a big version of the balance bot on the ardurover page in order to save time and then think about how the speech and vision brains will talk to the wheels and direct motion.

Finally, there should also be a “rest” mode, so I designed the balance bot with a retractable “foot” that can be extended when batteries are low, or the robot wants a break.

Putting it all together, these are the essential elements of the project: a balance bot with a sensor head incorporating two dedicated SBCs that talk to the body and allow it to see people, roll up to them, ask them their name, and so on.
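For the "roll up to them" part, the two CSI cameras could in principle give a distance estimate from stereo disparity. The sketch below shows only the standard pinhole relation (distance = focal length × baseline / disparity) for a calibrated, rectified camera pair. The function name and the example numbers (700 px focal length, 6 cm between the cameras) are assumptions for illustration, not measurements from this robot.

```python
import math

def stereo_distance_m(disparity_px, focal_length_px, baseline_m):
    """Estimate the distance to a point seen by both cameras.

    disparity_px    : horizontal pixel shift of the point between left and right images
    focal_length_px : camera focal length expressed in pixels (from calibration)
    baseline_m      : distance between the two camera centres, in metres
    """
    if disparity_px <= 0:
        # No usable match, or the point is effectively at infinity.
        return math.inf
    return focal_length_px * baseline_m / disparity_px

# Hypothetical values: a face shifted by 21 px comes out at roughly 2 m.
distance = stereo_distance_m(disparity_px=21, focal_length_px=700, baseline_m=0.06)
```

Because distance is inversely proportional to disparity, the estimate gets coarse quickly for far-away objects, so a wider spacing between the cameras would help at longer range.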

What I have acquired so far:

-

The core 2 respeaker

-

The NanoPC-T4

-

Two CSI camera modules for the NanoPC-T4

-

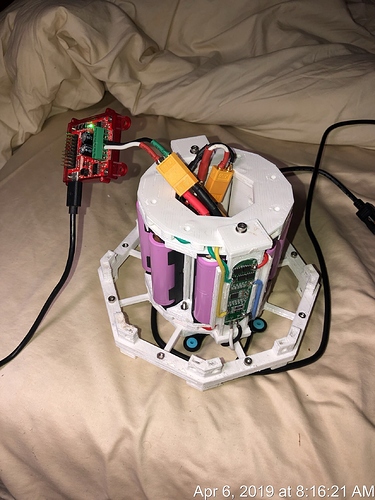

A pixhawk flight controller for the balancing

-

A motor controller, and two pololu geared motors, with nice giant wheels

-

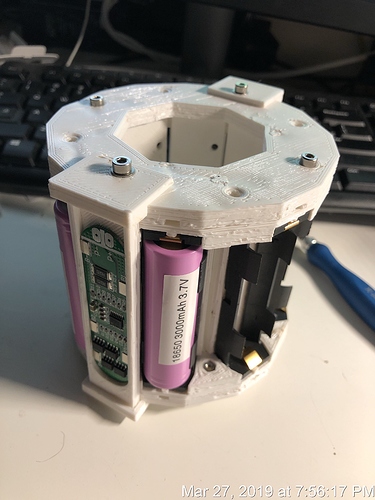

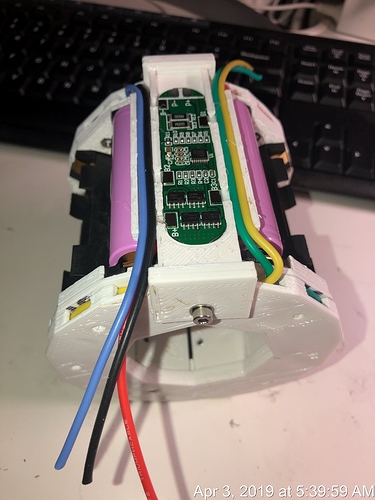

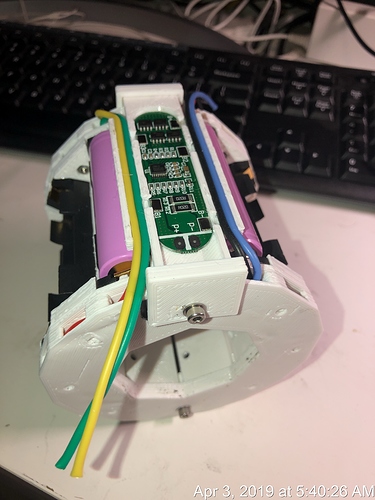

lots of 18650 Li-ion batteries for hours of robot fun (also battery holders and BMS boards for the custom power system

-

a high power 12V regulator to run the wheel motors

-

a small lazy suzy platform so the head can swivel

-

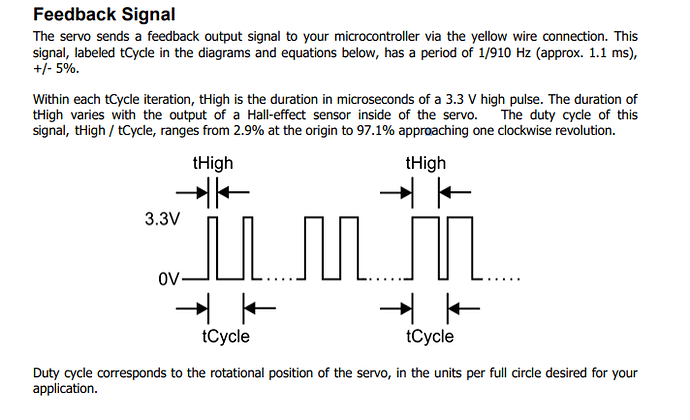

two continuous rotation servos that will move the head around

-

lots and lots of 3D printing to put all the pieces together.

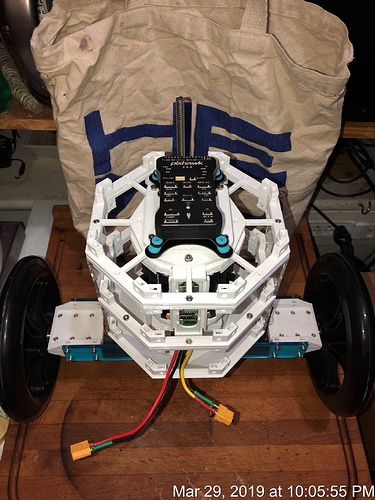

This is what the body looks like now…

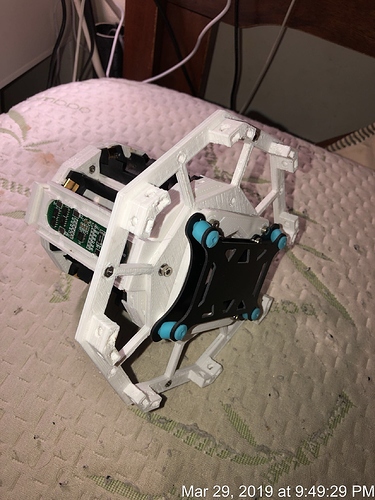

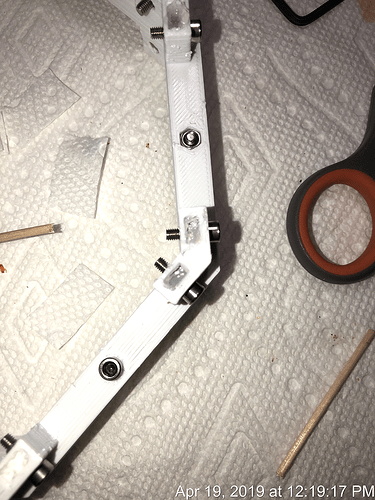

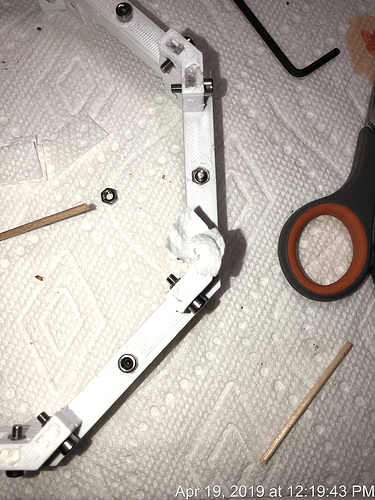

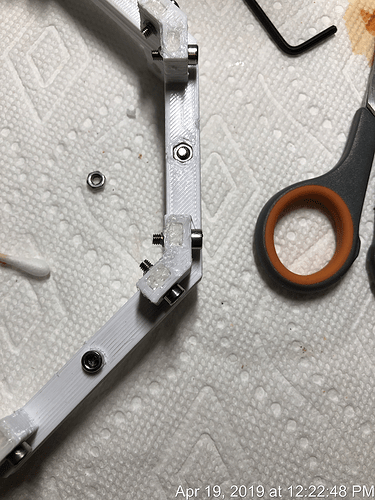

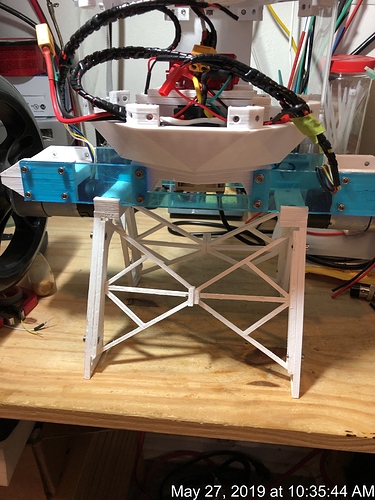

This is what the head looks like now. I am testing the servos that will move the head around and up and down.

This is the head with the SBCs fully mounted, to give you a better look at it all together.

Stuff left to do (so much, but lots of fun!):

- Finish the head movement mechanism. The servos are basically mounted but still need to be connected to the SBC and exercised with some simple test scripts. I also want to install some optical stops so the head cannot break itself by rotating too far.

- Finish the power system. This is fairly close: I have the right wire on the way, and I have regulators for 12 V and 5 V up the wazoo to power the wheels and the SBCs all at the same time. I have also mostly figured out how the wiring will be routed.

- Start programming the vision SBC and speech SBC to talk to each other and to the Pixhawk. This will be the biggest challenge once the physical beast is put together: making it move around and perform simple checks and tasks. Super simple tasks, like recognizing objects such as pencils and coffee cups and then saying “pencil” and “coffee cup”, will definitely be among the first cool applications. Object following with the head will also be very cool at first.
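One simple way the head-following and optical-stop ideas could fit together is a proportional controller: the further the tracked object is from the centre of the camera frame, the faster the pan servo turns, and any command that would push into a triggered stop is zeroed. This is only a sketch under assumptions. The function names, the gain and deadband values, and the sign convention (positive speed turns the head right) are all hypothetical, and the actual servo and sensor I/O is left out.

```python
def pan_speed_command(target_x_px, frame_width_px, gain=1.0, deadband=0.05, max_speed=1.0):
    """Return a speed in [-max_speed, max_speed] for a continuous-rotation pan servo.

    target_x_px is the horizontal centre of the tracked object's bounding box.
    Positive output is assumed to mean "turn right".
    """
    center = frame_width_px / 2
    error = (target_x_px - center) / center  # -1 (far left) .. +1 (far right)
    if abs(error) < deadband:
        return 0.0  # close enough to centred: hold still to avoid jitter
    speed = gain * error
    return max(-max_speed, min(max_speed, speed))

def limit_with_stops(speed, left_stop_hit, right_stop_hit):
    """Zero any command that would drive the head further into a triggered stop."""
    if left_stop_hit and speed < 0:
        return 0.0
    if right_stop_hit and speed > 0:
        return 0.0
    return speed
```

In a loop, the vision SBC would compute `pan_speed_command` for each frame and pass the result through `limit_with_stops` before it reaches the servo. Because continuous-rotation servos take a speed rather than an angle, the stops (or some other position feedback) are what keep the head from winding past its safe range.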

Here is a link to some extra documentation on Google that I have been putting together along the way, including some of the parts that I purchased.

Finally, why is it called Charlie? Well, no good reason. I played around with a lot of acronyms that spelled “ISAAC” (Interactive Speech yada yada), but they all sounded very boring and lab-like. I wanted this robot to interact with people, so I wanted it to have a friendly name. My girlfriend chose Charlie.

What do you think?